Jaeyeon Kim

Ph.D. student at Harvard

Hello, my name is Jaeyeon Kim! I also go by Jay. I’m currently a second-year Ph.D. student at Harvard CS, advised by Sitan Chen and Sham Kakade. I also work closely with Michael Albergo and Yilun Du.

At Harvard, my research focuses on deepening our understanding of modern generative models and pioneering new paradigms for generative modeling. My recent work on Masked Diffusion received an Outstanding Paper Award at ICML!

Previously, I earned a B.S. in Math at Seoul National University, where I worked on optimization theory with Professors Ernest Ryu and Asu Ozdaglar. I received an A+ in every math course I took! Earlier in my academic journey, I was an IMO finalist in South Korea.

I was born and raised in South Korea. When I’m not working, you’ll probably catch me outside: traveling, working out, or running. I really enjoy learning new things in new environments, both in life and at work. Once I grasp the underlying structure, I translate it into my own words and turn it into novel insights!

news

| Date | News |
|---|---|
| Jul 12, 2025 | My paper on Masked Diffusion received an Outstanding Paper Award at ICML 2025! This award was given to just 6 papers. (news article, link, post) |
| May 12, 2025 | Two papers (Masked Diffusion, LoRA theory) have been accepted at ICML 2025, both as oral presentations! |
| Sep 01, 2024 | I’m starting my Ph.D. at Harvard University, advised by Prof. Sitan Chen and Prof. Sham Kakade. I’m thrilled to pursue my research career at Harvard! |

selected publications


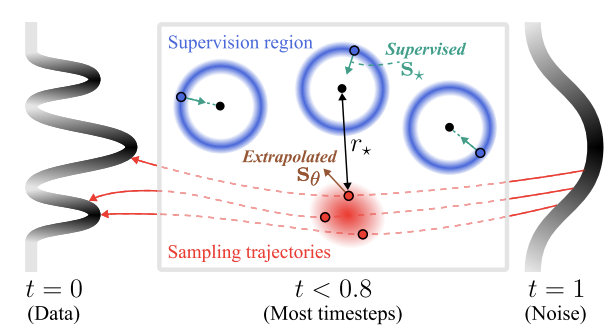

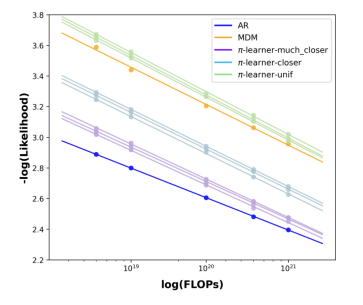

Train for the worst, plan for the best: Understanding token ordering in masked diffusions. International Conference on Machine Learning (Outstanding Paper Award), 2025


LoRA Training Provably Converges to a Low-Rank Global Minimum or It Fails Loudly (But it Probably Won’t Fail). International Conference on Machine Learning (Oral), 2025


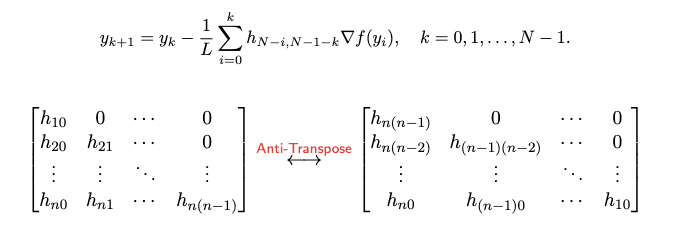

Time-Reversed Dissipation Induces Duality Between Minimizing Gradient Norm and Function Value. Neural Information Processing Systems, 2023
